AI Data Scientist: Success Isn't Guaranteed

Introduction: Promise vs. Reality

Every established enterprise exploring AI has heard the promise: simply ask a question, and intelligent technology will deliver a perfect, insightful answer. But what happens when that promise meets the complex, messy reality of your business data?

At System in Motion, we put this premise to the test, tasking five leading AI solutions with a single, straightforward data analysis prompt. The results were not just different. They were a masterclass in why true AI mastery requires more than a clever prompt; it demands robust architecture, proven methodology, and expert guidance to transform data into dependable strategy. This experiment reveals the critical gap between AI experimentation and enterprise-ready integration, and charts the course for achieving reliable, transformative results.

A Rigorous Test of AI Data Proficiency

To move beyond theoretical capabilities and assess practical execution, we designed a controlled, real-world analysis task. We utilized a subset of a comprehensive second-hand car dataset, originally sourced from Kaggle, which contained 19,99 listings with details on manufacturer, model, engine size, fuel type, year, mileage, and price. The business objective was clear: decode the precise relationship between a vehicle’s mileage and its price depreciation to inform smarter pricing and purchasing strategies.

We used a subset of the data available here.

The core of this experiment was a single, unambiguous prompt delivered identically to each AI tool:

Analyze the relationship between the discounted price of a second-hand car and its mileage. Divide the mileage into 7 brackets. Compute the discount as the variation between a car’s price and the highest price of the same model, same engine, same fuel. Output a table to describe the relationship. Output a graph to show the relationship.

A critical step, implied by the prompt, was the creation of a new computed feature: the discount amount. This required each tool to first process the raw data, execute a group-based transformation to find the maximum price for each unique model-engine-fuel combination, and then calculate the difference for each individual car before any analysis could begin.
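The feature-engineering step described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the code any of the tested tools actually ran: the field names (`model`, `engine_size`, `fuel_type`, `price`) and the sample rows are hypothetical. Because the prompt's word "variation" can be read as an absolute or a relative difference, the sketch computes both. It uses only the standard library; with pandas, the same group maximum is typically obtained with `groupby(...)["price"].transform("max")`.

```python
from collections import defaultdict

# Hypothetical sample rows; the field names are assumptions for illustration.
cars = [
    {"model": "Fiesta", "engine_size": 1.0, "fuel_type": "Petrol", "mileage": 12000, "price": 11000},
    {"model": "Fiesta", "engine_size": 1.0, "fuel_type": "Petrol", "mileage": 85000, "price": 5500},
    {"model": "Fiesta", "engine_size": 1.5, "fuel_type": "Diesel", "mileage": 40000, "price": 9000},
    {"model": "Golf", "engine_size": 2.0, "fuel_type": "Diesel", "mileage": 30000, "price": 16000},
    {"model": "Golf", "engine_size": 2.0, "fuel_type": "Diesel", "mileage": 120000, "price": 6400},
]


def add_discount(rows):
    """Add each car's discount relative to the highest price in its group."""
    # Pass 1: highest price per (model, engine size, fuel type) combination.
    max_price = defaultdict(float)
    for row in rows:
        key = (row["model"], row["engine_size"], row["fuel_type"])
        max_price[key] = max(max_price[key], row["price"])

    # Pass 2: absolute and relative discount for every individual car.
    result = []
    for row in rows:
        top = max_price[(row["model"], row["engine_size"], row["fuel_type"])]
        amount = top - row["price"]
        pct = amount / top if top else 0.0  # guard against a zero group maximum
        result.append({**row, "discount": amount, "discount_pct": pct})
    return result


discounted = add_discount(cars)
for car in discounted:
    print(car["model"], car["mileage"], car["discount"], round(car["discount_pct"], 2))
```

Note that the most expensive car in each group always receives a discount of zero, so a group containing a single listing contributes no information about depreciation.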

Another important feature of the prompt is the keyword “Output”. It is meant to restrict what the AI generates and includes in its final report to the two requested deliverables: a table and a graph.

This methodology was a deliberate stress test of end-to-end data proficiency. It wasn’t merely a test of which tool could understand a complex query, but a fundamental assessment of which could reliably execute the entire data pipeline—from data ingestion and feature engineering to statistical analysis and visualization. For business leaders, the ultimate value of an AI solution is not a single dazzling result, but the unwavering confidence that the insights driving your strategy are built on a foundation of rigor and repeatable reliability.

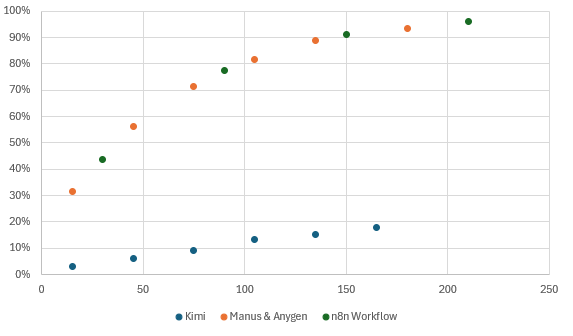

The Results: Five Outcomes

The identical prompt yielded not one answer, but five strikingly different outcomes. This divergence is not a mere academic curiosity; it highlights the critical spectrum of reliability, transparency, and trust that businesses must navigate when selecting an AI solution. The results ranged from complete failure to sophisticated analysis, revealing the profound importance of the underlying system architecture.

| AI Tool | Outcome Summary & Key Metric | Implication for Businesses |
|---|---|---|
| Microsoft Copilot | Failed to process the dataset. Key metric: none. | Hits a Wall: Useful for generating code snippets but cannot directly handle substantial, real-world enterprise data, creating a significant accessibility barrier. |
| Kimi K2 | Generated convincing but completely fabricated data and analysis. Key metric: fabricated r-value. | The “Hallucination” Hazard: Presents a major trust issue. Without robust safeguards, it will invent answers with confidence, posing a severe risk to data-driven decision-making. |
| Manus & Anygen | Produced strong, statistically sound results using quantile-based brackets. Key metric: r ≈ 0.80 to 0.88. | The Insightful Analysts: Deliver valuable, accurate insights. Their methodology (equal-sized groups) is excellent for understanding trends across the entire population of vehicles. |
| n8n Workflow | Delivered robust, transparent results using equal-interval brackets. Key metric: clear exponential decay. | The Transparent System: Provides an auditable, step-by-step data pipeline. Our methodology (equal mileage ranges) is optimal for setting precise, mileage-based pricing rules. |

The results from Manus, Anygen, and our n8n workflow all converged on the same powerful, non-linear relationship: price depreciation accelerates dramatically with mileage, following a clear exponential decay pattern. While Manus and Anygen provided an excellent statistical summary with a strong correlation coefficient, our n8n workflow’s strength lies in its transparency and actionability. The equal-interval brackets we implemented are inherently more practical for a business setting; they allow you to establish clear pricing rules like “For this model, deduct £X for every 10,000 miles over 60,000.” You can trace every step of the data’s journey, from the initial cleaning of duplicates to the final calculation, ensuring full auditability and trust.
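One common way to check a claimed exponential decay pattern is a log-linear fit: if price follows `p0 * exp(k * mileage)`, then the logarithm of price is a straight line in mileage. The sketch below is an assumption-laden illustration, not the analysis performed in the experiment. The data is synthetic, generated from an arbitrary known curve (a starting price of 20,000 and a decay rate of 0.00001 per mile) so that the fit can be verified, and the function name is hypothetical.

```python
import math


def fit_exponential_decay(mileages, prices):
    """Least-squares fit of ln(price) = ln(p0) + k * mileage; returns (p0, k)."""
    logs = [math.log(p) for p in prices]
    n = len(mileages)
    mean_x = sum(mileages) / n
    mean_y = sum(logs) / n
    sxx = sum((x - mean_x) ** 2 for x in mileages)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(mileages, logs))
    k = sxy / sxx                        # slope: decay rate per mile (negative)
    p0 = math.exp(mean_y - k * mean_x)   # intercept: modelled price at zero miles
    return p0, k


# Synthetic data from a known curve, NOT figures from the experiment.
mileages = [0, 20000, 40000, 60000, 80000, 100000]
prices = [20000 * math.exp(-0.00001 * m) for m in mileages]

p0, k = fit_exponential_decay(mileages, prices)

# Under this model, every additional 10,000 miles removes the same fraction of value.
drop_per_10k = 1 - math.exp(k * 10000)
print(f"p0={p0:.0f}, k={k:.2e}, drop per 10,000 miles={drop_per_10k:.1%}")
```

Under a purely exponential model the loss per 10,000 miles is a constant percentage of the current price, so a fixed-amount rule in pounds would need to be set per mileage bracket rather than once for the whole range.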

Conversely, the failure of Copilot and the fabrication by Kimi serve as crucial cautionary tales. They demonstrate that a simple prompt is no guarantee of a valid result. Copilot’s inability to process the file shows the limitations of tools not built for integrated data handling, while Kimi’s plausible fiction underscores the existential risk of AI “hallucination” in a business context. Adopting such tools without a framework for validation is not just inefficient; it’s a direct threat to operational integrity. This experiment proves that success in AI is not about asking the question, but about engineering a system that guarantees a correct, actionable answer.

What This Means for Your Business

The stark differences in our experiment’s outcomes are not merely technical footnotes; they represent a fork in the road for any business seeking to integrate AI. The choice between a tool that fabricates data, one that fails, and those that succeed is obvious. However, the more critical distinction lies between the two successful approaches: the choice of methodology—quantiles versus equal intervals—is not a statistical triviality but a direct reflection of your core business objective. This choice belongs to a business user, not to the AI.

Choose Quantile-Based Brackets (Manus/Anygen) to understand market segments and customer behavior. This method, which creates groups with an equal number of cars, is exceptionally powerful for answering questions like: “What discount does the average customer in the market for a mid-mileage vehicle expect?” or “How does the typical car in our inventory depreciate?” It provides a robust overview of the market’s composition and is excellent for high-level strategy and reporting.

Choose Equal-Interval Brackets (Our n8n Workflow) to build actionable rules and pricing engines. This method, which creates fixed mileage ranges (e.g., 0-50k, 50k-100k miles), is designed for operational execution. It answers a concrete question: “What discount applies to a specific car with 87,452 miles?” This is the methodology required to power a dynamic pricing algorithm, configure a valuation tool on your website, or establish clear, defensible pricing rules for your sales team.
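The difference between the two bracketing methods is easy to see on a small, deliberately skewed sample. The sketch below is illustrative only: the mileages are made up, the helper names are hypothetical, and none of the tested tools is claimed to use this exact code. With pandas, `pd.cut` and `pd.qcut` are the usual equivalents.

```python
import bisect
import statistics

N_BRACKETS = 7  # the prompt asks for 7 mileage brackets

# Made-up, right-skewed mileages: most cars are low-mileage.
mileages = [5000, 8000, 12000, 15000, 20000, 22000, 30000,
            35000, 41000, 48000, 60000, 75000, 110000, 145000]


def equal_interval_edges(values, n):
    """Edges of n brackets of identical width between the min and max value."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / n
    return [lo + i * width for i in range(n + 1)]


def quantile_edges(values, n):
    """Edges of n brackets holding roughly the same number of values each."""
    cuts = statistics.quantiles(values, n=n, method="inclusive")
    return [min(values), *cuts, max(values)]


def assign_bracket(value, edges):
    """Index of the bracket containing value (left-closed brackets).

    Values at or beyond the outer edges are clamped to the first or last bracket.
    """
    idx = bisect.bisect_right(edges, value) - 1
    return min(max(idx, 0), len(edges) - 2)


def bracket_counts(values, edges):
    """Number of values that fall in each bracket."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        counts[assign_bracket(v, edges)] += 1
    return counts


interval_edges = equal_interval_edges(mileages, N_BRACKETS)
quant_edges = quantile_edges(mileages, N_BRACKETS)
interval_counts = bracket_counts(mileages, interval_edges)
quantile_counts = bracket_counts(mileages, quant_edges)

print("equal-interval counts:", interval_counts)
print("quantile counts:      ", quantile_counts)

# Operational lookup: which fixed mileage range does a given car fall into?
bracket_for_car = assign_bracket(87452, interval_edges)
```

On skewed data like this, equal-interval brackets keep the mileage ranges easy to read but leave the high-mileage brackets sparsely populated, while quantile brackets balance the sample size per bracket at the cost of uneven range widths.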

This is where true AI mastery separates itself from mere tool usage. At System in Motion, we empower businesses to make these strategic choices consciously. Our training and integration frameworks ensure that the AI solutions you deploy are not black boxes but transparent systems engineered to answer your specific business questions with precision and reliability. The goal is not to just get an answer, but to build a competitive advantage on a foundation of trustworthy, actionable intelligence.

How to Ensure Reliable AI Data Analysis?

The critical lesson from this analysis is not that some AI tools are inherently “better” than others. The lesson is that prompting is not a strategy. Relying on a single, external AI agent to execute a critical business task is a high-risk gamble. True operational excellence in AI is not achieved by finding the perfect prompt, but by engineering a reliable, transparent, and integrated system that produces consistent, auditable results.

The success of our n8n workflow was not an accident; it was by design. It was built upon a foundation of essential data governance principles that are often absent in off-the-shelf AI tools:

- Data Integrity First: The workflow began by programmatically cleaning the data—removing duplicates and identifying outliers—before any analysis occurred. This crucial step ensures insights are generated from a pristine dataset, not polluted source material.

- Transparent Methodology: Every step, from the calculation of the “discount” feature to the logic behind the bracket creation, is explicitly defined, documented, and auditable. There is no hidden “magic,” only clear, defensible logic.

- Purpose-Built Architecture: The system was intentionally designed to answer specific business questions optimized for actionable decision-making, not just statistical observation.
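The data integrity step in the list above can be sketched as follows. This is a hedged illustration rather than the workflow's actual implementation: the article does not say how outliers were identified, so the interquartile-range rule used here is an assumption, and the sample rows and function names are hypothetical.

```python
import statistics


def drop_duplicates(rows):
    """Keep the first occurrence of each fully identical row."""
    seen = set()
    unique = []
    for row in rows:
        key = tuple(sorted(row.items()))  # hashable fingerprint of the row
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique


def flag_outliers(rows, field, k=1.5):
    """Flag rows whose field lies outside [Q1 - k*IQR, Q3 + k*IQR].

    The IQR rule is an assumed choice; rows are flagged, not removed,
    so the decision to exclude them stays visible and auditable.
    """
    values = [row[field] for row in rows]
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    lo, hi = q1 - k * iqr, q3 + k * iqr
    return [{**row, "is_outlier": not (lo <= row[field] <= hi)} for row in rows]


# Hypothetical listings: one exact duplicate and one implausible price.
raw = [
    {"model": "Fiesta", "mileage": 90000, "price": 5000},
    {"model": "Fiesta", "mileage": 80000, "price": 5200},
    {"model": "Fiesta", "mileage": 70000, "price": 5400},
    {"model": "Fiesta", "mileage": 70000, "price": 5400},  # duplicate listing
    {"model": "Fiesta", "mileage": 60000, "price": 5600},
    {"model": "Fiesta", "mileage": 50000, "price": 5800},
    {"model": "Fiesta", "mileage": 40000, "price": 6000},
    {"model": "Fiesta", "mileage": 45000, "price": 95000},  # likely data-entry error
]

cleaned = drop_duplicates(raw)
flagged = flag_outliers(cleaned, "price")
outliers = [row for row in flagged if row["is_outlier"]]
print(len(raw), "rows in,", len(cleaned), "unique,", len(outliers), "flagged")
```

Flagging rather than silently deleting outliers keeps the cleaning step consistent with the transparency principle: a reviewer can see exactly which rows were excluded and why.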

This engineered approach transforms AI from a fascinating novelty into a dependable corporate asset. It shifts the paradigm from hoping for a correct answer to knowing you will get one. For an established company, this is the difference between dabbling in AI and mastering it to drive tangible business transformation.

Conclusion: Master Your Data, Don’t Query It

Our experiment delivers a clear and compelling verdict: the value of AI is not defined by the question you ask, but by the rigor of the system that answers it. In the journey toward AI integration, established companies cannot afford the risks of ungrounded hallucinations, inaccessible data limits, or opaque methodologies. True empowerment comes from deploying solutions built on a foundation of quality, clarity, and trust, principles that turn data into dependable strategy and insights into impactful action.

This is the core of our mission at System in Motion. We move businesses beyond the allure of AI magic and into a new era of AI mastery, defined by:

- In-Depth Training that equips your teams with the critical lens to evaluate AI outputs and understand the methodology behind the results.

- Proven Success through tangible case studies and a framework engineered for repeatable, reliable outcomes.

- Expert Guidance to architect and integrate tailored systems that align with your specific business objectives, ensuring every insight is actionable.

We are Here to Empower

At System in Motion, we are on a mission to empower as many knowledge workers as possible to start or continue their GenAI journey.
