GPT Hallucinations: Why They Happen and How to Avoid Them


In recent months, language models like GPT have revolutionized natural language processing, becoming a staple in business conversations. These models can generate text so coherent it often mirrors human writing. However, new technologies also bring potential risks and pitfalls.

One significant risk of GPT and similar language models is “hallucination”: instances where the model generates text that is factually incorrect or unsupported. We’ll explore why these hallucinations occur and how knowledge workers can mitigate them when working with these models.

Why do GPT Hallucinations Occur?

Hallucinations in GPT models stem from how the models are trained. They are fed extensive text corpora, like the internet or a large book collection, and learn to predict the next words from the given context.

However, the model doesn’t store knowledge as such; it stores a statistical representation of language. That representation can produce factual truths, but it can just as fluently produce unverified or factually incorrect information. These fluent but wrong outputs are the hallucinations.
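To make the idea of a "statistical representation of language" concrete, here is a deliberately tiny sketch. It is not how GPT works internally (GPT uses a large neural network, not word-pair counts); the bigram counter, the toy corpus, and the function names `train_bigram` and `generate` are all assumptions made for illustration. The point it tries to show is the same, though: a model that only knows which words tend to follow which can produce a fluent sentence that no source ever stated.

```python
import random
from collections import Counter, defaultdict


def train_bigram(corpus: str) -> dict:
    """Count, for each word, which words follow it in the corpus."""
    counts = defaultdict(Counter)
    words = corpus.lower().split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def generate(counts: dict, start: str, length: int = 8, seed: int = 0) -> str:
    """Sample a word sequence by repeatedly picking a likely next word."""
    rng = random.Random(seed)
    word, output = start, [start]
    for _ in range(length - 1):
        followers = counts.get(word)
        if not followers:
            break  # no known continuation for this word
        choices, weights = zip(*followers.items())
        word = rng.choices(choices, weights=weights)[0]
        output.append(word)
    return " ".join(output)


# Toy corpus (illustrative only): every sentence in it is true.
corpus = (
    "paris is the capital of france . "
    "rome is the capital of italy . "
    "lyon is a city in france ."
)
model = train_bigram(corpus)

# Depending on the seed, the output may be a true sentence or a fluent
# but false one: the counts alone cannot tell the difference.
print(generate(model, "lyon"))
```

After "lyon is", the counts favour "the" (seen twice) over "a" (seen once), so the sketch can drift into "the capital of ..." even though the corpus never says that about Lyon. Nothing in the model marks such a sentence as unsupported.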

General Knowledge vs Specific Knowledge

GPT and similar models do not represent all knowledge equally. General knowledge, such as basic world facts or common idioms, is safer for these models to generate, because it appears in so many training sources that its statistical representation is good. More specific knowledge, like details about a particular industry or scientific field, may lack critical mass in the source data. The resulting statistical representation is poor, which increases the chance of generating hallucinations.

The model might generate text that seems plausible to a layperson but may not be accurate for someone with specialized knowledge. Thus, caution is advised when using GPT-3 for tasks requiring high-level domain expertise.

Avoid Generating Hallucinations

Knowledge workers using GPT or similar models can take several steps to avoid hallucinations:

2 Tips

- Incorporate domain expertise: Engage subject matter experts in tasks requiring specialized knowledge. They can help identify and correct inaccuracies or misconceptions the model might generate.

- Evaluate your output: It’s crucial to carefully evaluate your model’s output. Look for instances where the text seems inaccurate or misleading, analyze the root cause of the inaccuracy, and design a strategy.
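Part of evaluating output can be supported with simple tooling. The sketch below is one possible aid, not a substitute for review by a subject matter expert: it assumes you have the source text the model was supposed to rely on, and it flags any number in the output that does not appear in that source. The function name `unsupported_numbers` and the sample texts are hypothetical.

```python
import re
from typing import List

# Matches integers and simple decimals or thousands groups, e.g. 3, 2019, 1,500, 4.2
NUMBER = re.compile(r"\d+(?:[.,]\d+)*")


def unsupported_numbers(output: str, source: str) -> List[str]:
    """Return numbers found in the model output that are absent from the source."""
    source_numbers = set(NUMBER.findall(source))
    return [n for n in NUMBER.findall(output) if n not in source_numbers]


# Hypothetical example: the output changes the number of sites.
source = "The contract was signed in 2019 and covers 3 sites."
output = "Signed in 2019, the contract covers 5 sites."

print(unsupported_numbers(output, source))  # → ['5']
```

A check like this only catches one narrow kind of error (invented figures). Wrong names, dates written in words, or subtly false claims still need a human reader.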

4 Strategies

- All-inclusive prompts: If a prompt includes all necessary information for a response, GPT doesn’t need to create information, reducing the risk of hallucination. A well-designed prompt will achieve this.

- Extensive context: A common mistake in prompts is lack of context. Clear, unambiguous context reduces hallucinations. To increase the level of detail, imagine explaining the task to a colleague, then include every detail you would give them in the prompt.

- General knowledge only: Limiting GPT output to general knowledge helps avoid hallucination. However, the boundary between general and specific knowledge is often blurred. A thought experiment to determine what constitutes general knowledge is to estimate how many websites cover the topic. A large estimate indicates general knowledge.

- Prompt library: Store successful prompts in a prompt library for efficiency, safety, and repeatability.
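The first, second, and fourth strategies can be sketched together in a few lines. This is a minimal illustration under stated assumptions, not a prescribed implementation: the template wording, the function names `build_prompt`, `save_prompt`, and `load_prompt`, the JSON file format, and the sample task are all hypothetical. The sketch builds a prompt that carries its own task, context, and facts, then stores it in a small file-based prompt library so it can be reused.

```python
import json
import tempfile
from pathlib import Path
from string import Template

# Hypothetical template: the task, the context, and every fact the answer
# needs are supplied in the prompt, so the model has nothing to invent.
PROMPT_TEMPLATE = Template(
    "Task: $task\n"
    "Context: $context\n"
    "Facts (use only these):\n$facts\n"
    "If the facts do not cover the answer, reply 'not in the provided facts'."
)


def build_prompt(task: str, context: str, facts: list) -> str:
    """Assemble an all-inclusive prompt from a task, its context, and its facts."""
    fact_lines = "\n".join(f"- {fact}" for fact in facts)
    return PROMPT_TEMPLATE.substitute(task=task, context=context, facts=fact_lines)


def save_prompt(library_path: Path, name: str, prompt: str) -> None:
    """Add or update a named prompt in a JSON prompt library."""
    library = json.loads(library_path.read_text()) if library_path.exists() else {}
    library[name] = prompt
    library_path.write_text(json.dumps(library, indent=2))


def load_prompt(library_path: Path, name: str) -> str:
    """Fetch a named prompt from the JSON prompt library."""
    return json.loads(library_path.read_text())[name]


prompt = build_prompt(
    task="Write a two-sentence status email to the client.",
    context="The reader is a non-technical project sponsor.",
    facts=["Delivery moved to 14 March", "Budget is unchanged"],
)
print(prompt)

# A temporary folder stands in for wherever the team keeps its library.
with tempfile.TemporaryDirectory() as folder:
    library_file = Path(folder) / "prompts.json"
    save_prompt(library_file, "status-email", prompt)
    reloaded = load_prompt(library_file, "status-email")
```

A plain JSON file is used here only because it is easy to read and review; a shared document or a versioned repository would serve the same purpose.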

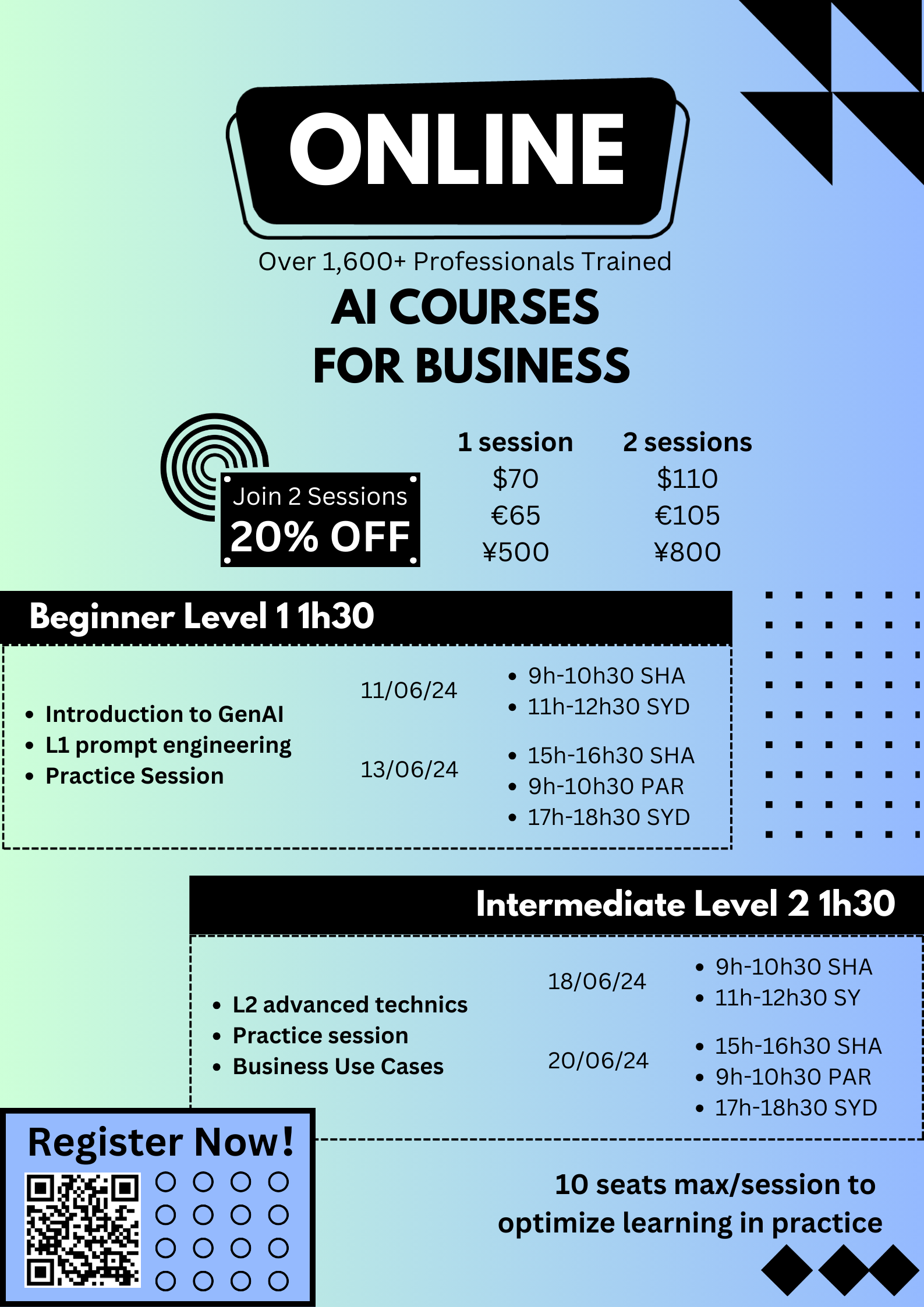

Train Yourself

Don’t let the complexity of AI models like GPT deter you from maximizing their potential. Our comprehensive training program is designed to help you navigate these models with confidence and precision. We’ll guide you through understanding GPT hallucinations and their prevention, creating effective prompts, and leveraging domain expertise. Make the investment in your professional development today and unlock the power of AI in your work. Subscribe to our newsletter to receive updates on our practical use cases and our upcoming trainings.

In conclusion, GPT hallucinations pose a real risk when working with language models. However, with mindfulness of potential errors, appropriate steps to avoid them, and careful evaluation, GPT models can be powerful tools for enhancing productivity.

We are Here to Help

At System in Motion, we are committed to building long-term solutions and solid foundations for your Information System. We can help you optimize your Information System, generating value for your business. Contact us for any inquiry.
